[HOLD – Stub only …]

Yesterday Philip Ball posted this quip:

And today’s demonstration that cells are not machines is… https://t.co/KZLbGKhE6B

— Philip Ball (@philipcball) February 16, 2023

And then this was liked by Kevin Mitchell:

all talk of “AI becoming conscious” is either based on a complete misunderstanding of AI (and consciousness) or pure hype… or both…

— Abeba Birhane (@Abebab) February 18, 2023

And Philip also tweeted these, liked by Kevin:

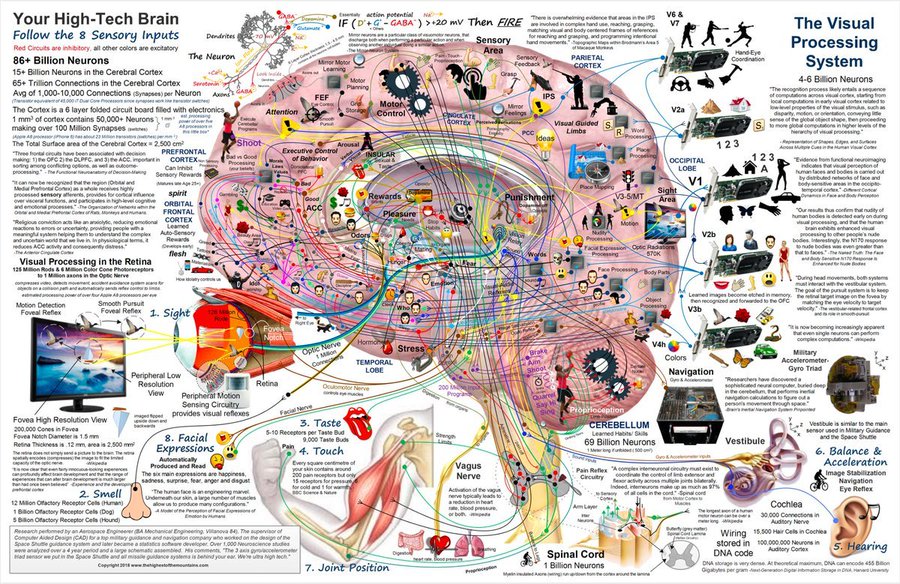

The great thing about these images of how the brain works is that they show us this is not the way to understand how the brain works. (See also: the cell.) pic.twitter.com/crigNTiPKX

— Philip Ball (@philipcball) July 8, 2022

Hope it’s no letdown to tell you all that I never meant this particular image to be taken too seriously. It’s from a loopy source that also included this image, prompting a nicely sardonic comment from @PessoaBrain. BUT… pic.twitter.com/ecxoEeAN0K

— Philip Ball (@philipcball) July 9, 2022

I do think there is some value in mapping brain connectivity, of course. But that crazy diagram was a sort of caricature of where you can end up if you get too literal about it. The same applies to the mapping of biochemical or genetic interactions networks in cells…

— Philip Ball (@philipcball) July 9, 2022

And after @JustinCaouette shared this Templeton piece by Marcus Arvan a few days ago, I was prompted to respond:

I’m not saying it’s wrong, haven’t digested what he is saying yet. Just responding to the “click-bait” question in the headline 🙂

(We’re “computers” and at root all information is “digital”. My response said “we”.)

I can give you a longer response on “artificial” computers.

— Ian Glendinning (@psybertron) February 9, 2023

I skim-read the piece and added:

2/2 Even in digital computers the categorical variables (voltages and sensor inputs) are analogue and it’s the switches and control circuits that interpret digital “decisions”. Same in brains/minds 🙂

Anyway – long story – told best by @Mark_Solms

— Ian Glendinning (@psybertron) February 9, 2023

What this article doesn’t recognise is that our awareness of a quality is in the context of our valenced state space, in which our possible actions.. selected to optimise our future valence… are steps to different valenced states. It is this valenced terrain that we feel.

— Peter Martin (@PeterMa88010875) February 17, 2023

And for good measure:

If you think the AI is sentient, you just failed the Turing Test from the other side.

— Naval (@naval) February 18, 2023

[This is just a stub for elaboration about biological (living, universal Turing) machines … Suffice to say, the tweets above that claim to point out misunderstandings are themselves misunderstandings.]

And like my previous post … it’s about properly recognising “complexity”.